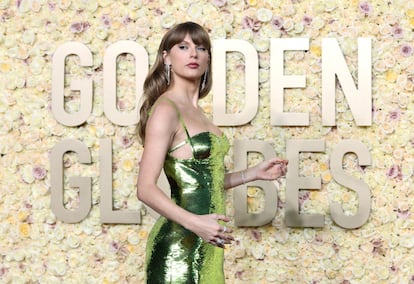

X pauses some Taylor Swift searches as deepfake explicit images spread

Sexually explicit fake images of Swift began circulating last week on X, making her the most famous victim of a scourge that tech platforms have struggled to fix

Elon Musk’s social media platform X has blocked some searches for Taylor Swift as pornographic deepfake images of the singer have circulated online.

Attempts to search for her name without quote marks on the site Monday resulted in an error message and a prompt for users to retry their search, which added, “Don’t fret — it’s not your fault.”

However, putting quote marks around her name allowed posts to appear that mentioned her name.

Sexually explicit and abusive fake images of Swift began circulating widely last week on X, making her the most famous victim of a scourge that tech platforms and anti-abuse groups have struggled to fix.

“This is a temporary action and done with an abundance of caution as we prioritize safety on this issue,” Joe Benarroch, head of business operations at X, said in a statement.

After the images began spreading online, the singer’s devoted fanbase of Swifties quickly mobilized, launching a counteroffensive on X and a #ProtectTaylorSwift hashtag to flood it with more positive images of the pop star. Some said they were reporting accounts that were sharing the deepfakes.

The deepfake-detecting group Reality Defender said it tracked a deluge of nonconsensual pornographic material depicting Swift, particularly on X, formerly known as Twitter. Some images also made their way to Meta-owned Facebook and other social media platforms.

The researchers found at least a couple dozen unique AI-generated images. The most widely shared were football-related, showing a painted or bloodied Swift that objectified her and in some cases inflicted violent harm on her deepfake persona.

Researchers have said the number of explicit deepfakes has grown in the past few years, as the technology used to produce such images has become more accessible and easier to use.

In 2019, a report released by the AI firm DeepTrace Labs showed these images were overwhelmingly weaponized against women. Most of the victims, it said, were Hollywood actors and South Korean K-pop singers.

