Faces created by AI are more trustworthy than real ones

Research flags up the difficulties in distinguishing synthetic faces from those of actual people

Artificial intelligence (AI) can now create plausible images of people, as seen on websites such as This Person Does Not Exist, as well as images of non-existent animals and even rooms for rent.

The similarity between the mocked-up images and reality is such that it has been flagged up by research published in Proceedings of the National Academy of Sciences of the United States of America (PNAS), which compared real faces with those created by a type of AI algorithm known as a generative adversarial network (GAN).

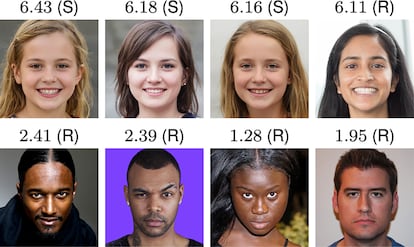

Not only does the research conclude that it was hard to distinguish between the two, it also shows that fictitious faces generate more trust than real ones. Although the difference is slight at just 7.7%, the faces that were considered least trustworthy were real while three of the four believed to be most trustworthy were fictitious. Sophie Nightingale, a professor of psychology at Lancaster University and co-author of the study, says the result wasn’t something the team were expecting: “We were quite surprised,” she says.

The research was based on three experiments. In the first, 315 participants were asked to distinguish between real and synthesized faces and scored an accuracy rate of 48.2%. In the second, a different group of 219 participants was given tips on distinguishing synthetic faces from real ones, along with feedback on their guesses. The percentage of correct answers in the second experiment was higher than in the first, with an accuracy rate of 59%. In both cases, the faces that were most difficult to classify were those of white people. Nightingale believes this discrepancy arises because the algorithms are more used to this type of face, but that this will change with time.

In the third experiment, the researchers wanted to go further and gauge the level of trust generated by the faces and check whether the synthetic ones triggered the same levels of confidence. To do this, 223 participants had to rate the faces from 1 (very untrustworthy) to 7 (very trustworthy). The average score was 4.48 for the real faces versus 4.82 for the synthetic faces. Although the difference amounts to just 7.7%, the authors stress that it is “significant.” Of the four most trustworthy faces, three were synthetic, while the four that struck the participants as least trustworthy were real.
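For readers who want to check the arithmetic, the short sketch below computes the relative gap between the two average ratings. It uses only the rounded means quoted above, and the function name is purely illustrative. With those rounded inputs the result is about 7.6%, close to the 7.7% reported; the small gap is presumably down to rounding in the published averages.

```python
def relative_difference_percent(baseline: float, other: float) -> float:
    """Return the percentage by which `other` exceeds `baseline`."""
    return (other - baseline) / baseline * 100


# Rounded mean trust ratings on the 1-to-7 scale, as quoted in the article.
real_mean = 4.48
synthetic_mean = 4.82

gap = relative_difference_percent(real_mean, synthetic_mean)
print(f"Synthetic faces were rated {gap:.1f}% more trustworthy than real ones")
```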

All the faces analyzed belonged to a sample of 800 images, half real and half fake. The synthetic half was created by GANs. The sample was composed of an equal number of men and women of four different races: African American, Caucasian, East Asian and South Asian. The researchers matched each real face with a synthetic one of a similar age, gender, race and general appearance. Each participant analyzed 128 faces.

José Miguel Fernández Dols, professor of social psychology at the Autonomous University of Madrid whose research focuses on facial expression, points out that not all faces have the same expression or posture and that this can “affect judgments.” The study takes into account the importance of facial expression and assumes that a smiling face is more likely to be rated more trustworthy. However, 65.5% of the real faces and 58.8% of the synthetic faces were smiling, so facial expression alone “cannot explain why synthetics are rated as more trustworthy.”

The researcher also considers the posture of three of the images rated less trustworthy to be critical: pushing the upper part of the face forward with respect to the mouth and protecting the neck is a posture that frequently precedes aggression. “Synthetic faces are becoming more realistic and can easily generate more trust by playing with several factors: the typical nature of the face, features and posture,” says Fernández Dols.

The consequences of synthetic content

Beyond synthetic faces, Nightingale predicts that other types of artificially created content, such as video and audio, are “on their way” to becoming indistinguishable from real content. “It is the democratization of access to this powerful technology that poses the most significant threat,” she says. “We also encourage reconsideration of the often-laissez-faire approach to the public and unrestricted releasing of code for anyone to incorporate into any application.”

To prevent the proliferation of non-consensual intimate images, fraud and disinformation campaigns, the researchers propose guidelines for the creation and distribution of synthesized images to protect the public from “deep fakes.”

But Sergio Escalera, a professor at the University of Barcelona (UB) and member of the Computer Vision Center, a research center at the Autonomous University of Barcelona (UAB), highlights the positive aspect of AI-generated faces: “It is interesting to see how faces can be generated to transmit a friendly emotion,” he says, suggesting this could be incorporated into the creation of virtual assistants or used when a specific expression, such as calm, needs to be conveyed to people suffering from a mental illness.

According to Escalera, from an ethical point of view, it is important to expose AI’s potential and, above all, “to be very aware of the possible risks that may exist when handing that over to society.” He also points out that current legislation is “a little behind” technological progress and there is still “much to be done.”
