From fascination to fear of a hidden agenda: The year of ChatGPT will define the battle for the future of humanity

Widespread amazement at creative artificial intelligence has resulted in the fever of technocapitalism, which aims to monopolize profits and impose regulations that favor its interests over those of society

A single grimace can encompass a world.

In 2016, Lee Sedol, the world champion of Go, was finally defeated… but not by a human. He was beaten by a machine: AlphaGo, created by DeepMind, a subsidiary of Google. Sedol, the unbeatable master of an ancient game with more possible positions than there are atoms in the universe, was convinced that he would win 5-0. He ultimately lost 4-1. Over the five games, all of humanity was reflected in Sedol’s face when he observed a winning move made by the machine, as unexpected as it was fascinating. The champion was speechless. And, after a few seconds of shock, he smiled casually. Then he frowned, concentrating again on his intellectual endeavor.

His gesture summarizes what has happened this year throughout the planet following the emergence of ChatGPT and the rest of the creative artificial intelligence (AI) chatbots. We’ve all felt stunned and challenged. The AI that amazes us is suddenly speaking to us. Sedol realized that it was speaking to him with new words, but in a human language, through his game. That’s the key to everything that has happened: only when we’ve seen ourselves reflected in a talking machine can we take it seriously.

However, beneath the noise of the headlines, the seduction of the machines has been weaving a hidden agenda.

“The famous [Spanish] philosopher José Ortega y Gasset said: ‘To be surprised, to wonder, is to begin to understand,’” summarizes Sara Hooker, one of the most prominent researchers in the sector. After working at Google Brain, she founded Cohere For AI, a not-for-profit research laboratory. “[2023] has been the year of surprise and wonder, when groundbreaking advances in the artificial intelligence of language have reached the general public beyond the scientific world,” Hooker notes. “But this also marks the beginning of our ability to understand how to use this technology in a meaningful and responsible way.”

It’s a cycle, the researcher explains, that we’ve seen before, whether with the emergence of the internet or mobile phones. And now comes generative AI, which is capable of creating texts and images. “It takes time to discover the best uses and how to develop them. It doesn’t happen overnight,” the researcher cautions. She wrote a guiding document for the AI Safety Summit that was held at the Bletchley Park estate, in the United Kingdom. From that summit — organized by British Prime Minister Rishi Sunak — a declaration was released on November 1, in which thirty nations (such as the U.S., the U.K. and China) demand security and transparency from the AI sector.

Daniel Innerarity, a philosopher who holds the Chair in AI & Democracy at the Florence School of Transnational Governance, believes that these advances have generated an environment that he dares to describe as “digital hysteria,” based on news “that arouses fears and exaggerated expectations.” The digital hysteria of the past 12 months was lit by two torches: the surprise that Hooker talks about and the greed of big tech. Alphabet (Google), Meta, Microsoft and other transnational firms want AI to guarantee their infinite growth and have each set out to conquer the playing field.

But that commercial revolution — and the political race to regulate it — requires the atomic fuel of human fascination. That impact has been the catalyst for a phenomenon that has captured much of the global conversation. It’s what led Sam Altman — the leader of OpenAI (the company that developed ChatGPT) until last week, when he was fired — to meet with leaders from around the planet… something unthinkable for an entrepreneur who, just a few months ago, was totally unknown.

“The key to success has been conversation,” says neuroscientist Mariano Sigman. “The moment in which a machine began to generate ideas, to articulate language with surprising fluidity and subtlety, that was the great change. Thanks to this, there’s enormous awareness of the consequences that artificial intelligence entails, which would have gone unnoticed if it had remained in a much more exclusive sphere,” the researcher adds.

AI had already been revolutionizing sectors for years and achieving great successes, such as dethroning Sedol at Go, detecting tumors for radiologists, driving cars reasonably well, or studying proteins to open the door to countless medications. But all these past accomplishments are nothing like the irresistible magnetism that a conversational machine exerts on humans. According to a study carried out by a group of American universities, the OpenAI program shows more empathy than doctors when it comes to conveying a diagnosis. That ability to speak with sentiments caused global attention to turn towards AI. And it’s what has convinced world leaders that the problem — or the revolution — requires urgent action.

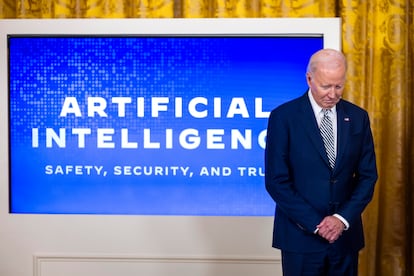

President Joe Biden has already published his legislative framework for the United States, while the European Union wants to have regulations ready by the end of this year. Meanwhile, various AI experts are writing articles on human consciousness as if they were philosophers, the fathers of this technological debate, or prophets of the apocalypse. They’re holding Byzantine discussions on social media, like priests delving into a mystery that’s inaccessible to others. But the future of humanity may indeed depend on the outcome of these debates… or at least these conversations will determine how we go forward with addressing the results of AI when it comes to the labor market, privacy, misinformation, culture and copyright law.

One of these experts is Jürgen Schmidhuber, scientific director of the Dalle Molle Institute for Artificial Intelligence Research (IDSIA) in Switzerland. He laid some of the first bricks when it came to these decisive large language models (LLMs). “The curious thing is that the basic principles behind generative AI are more than three decades old,” he tells EL PAÍS.

“Why did it take so long for all this to take off?” he asks rhetorically. “Because, in 1991, computing was millions of times more expensive than today. Back then, we could only do small experiments. That’s the main reason why — in the last decade — companies like Google, OpenAI, Microsoft or Samsung have been able to implement our techniques billions of times a day on billions of smartphones and computers around the world.”

At this moment, it’s difficult to discern what the future of AI, and of artificial creativity more specifically, will be. It could be either a bubble or a revolution. Estimates say that the value of the industry could skyrocket to $200 billion by 2030. In the last year, however, only about $2.5 billion has been invested in generative AI, compared to around $65 billion for traditional AI (which flies airplanes or reads CVs), according to a report by the firm Menlo Ventures. “In 30 years, people will smirk at today’s applications, which will seem primitive compared to what [they have]. Civilization as we know it will be completely transformed and humanity will benefit greatly,” Schmidhuber predicts.

Two weeks in November

There were two weeks that changed everything forever. On November 15, 2022, Meta launched Galactica: a test version of an AI capable of creating language, aimed more at the creation of academic texts. It only lasted for three days — Meta pulled it after receiving harsh criticism for promoting biased and false information. Galactica was “hallucinating” — the term used for when these programs commit major errors.

On November 30, OpenAI released ChatGPT, which also hallucinated. But there were two important differences. Mark Zuckerberg’s company had a controversial past that weighed Galactica down, while Sam Altman was clever enough to have previously trained ChatGPT with people. After its technological development, the machine learned by talking to flesh-and-blood tutors, who reinforced the most human responses. Galactica was left in a drawer, while ChatGPT became one of the most successful programs in history, achieving in six months the social impact that took Facebook an entire decade to build.

What happened during the last two weeks of November 2022 also radically changed the mentality among the big tech giants, who had been investing billions of dollars in AI for more than five years and emptying the computer science departments of universities by hiring every relevant scientist. In all that time, the laboratories of these companies focused on developments such as Galactica or AlphaGo, fascinating tests, notable scientific achievements… but few products with real commercial power. What’s more, Google was far ahead, but it didn’t dare to launch these products on the market, for fear of putting its search engine’s dominance at risk (if you have an intelligent chatbot, why would you query a search engine?). At least, this is according to what has been revealed through investigations into Google’s monopolistic practices.

This past January, Microsoft announced a $10 billion investment into OpenAI, causing alarm bells to go off at Google. The search titan quickly abandoned its initial doubts and called for an internal “code red” to incorporate generative AI into all its products, in addition to merging its two laboratories — DeepMind and Google Brain — into a single department with one mission: be less scientific and more productive.

From the experiments, the focus then switched to marketing. And, funnily enough, the same businesspeople who invested billions in these algorithms started warning about the risks to humanity and demanding immediate regulation. Altman assured the public that, in the event of an AI apocalypse, even having a bunker “wouldn’t help.” While thousands of girls were being subjected to the sexual harassment resulting from these technologies (such as deepfake pornography), the technocapitalist aristocracy raised the specter of Terminator — the film in which machines subjugate the human species. Biden, meanwhile, claimed that his interest in legislating AI accelerated after watching the latest Mission: Impossible movie, in which a rogue AI system puts world powers on the ropes. Politicians have decided to act as soon as possible, out of fear that what happened with Facebook’s disinformation years ago will happen to them, with manipulations of images and audio.

Hooker acknowledges that there are “significant risks” from AI, but she’s more concerned that they’re not being properly prioritized. “More theoretical (and, in my opinion, extremely unlikely) scenarios, such as terminators taking over the world, have received a disproportionate amount of attention from society. I think this is a big mistake and that we should address the very real risks we currently face, such as deepfakes, misinformation, bias and cyber scams.”

According to many specialists, the catastrophic discourse suddenly coming from big tech firms stems from an interest in securing regulations that prevent new competitors from emerging, as newcomers would be burdened by a more stringent regulatory framework. The four leading companies in the race (Google, OpenAI, Microsoft and Anthropic) have even created their own lobby group to push their regulatory framework. The chief scientist at Meta, Yann LeCun, assures EL PAÍS that they only want to stop the arrival of new competitors and have the regulations tailored to their needs. “Altman, Demis Hassabis (Google DeepMind) and Dario Amodei (Anthropic) are the ones who are carrying out intense lobbying at this time,” LeCun notes. Andrew Ng, the former director of Google Brain, has been very vocal on the matter: “They would prefer not to have to compete with open source [AI], so they’re creating the fear that AI will lead to human extinction.”

More than half of university professors in this sector in the United States believe that corporate leaders are deceiving society in favor of their agendas. Even the OpenAI board dismissed Altman this past Friday, almost on the first anniversary of ChatGPT, implying that the CEO hadn’t been honest with the company. The journey of tycoon Elon Musk over the past few years is also very telling: he promoted the creation of OpenAI to combat Google’s opacity, but then wanted to control the company when he glimpsed its potential. He ended up departing after a dispute with Altman. Subsequently, after the global bombshell that was ChatGPT, Musk was among the first to call for a six-month moratorium on AI development. But in reality, he was working behind the scenes to create his own company, xAI, and his own smart program called Grok, which was launched on November 4, 2023.

“We run the risk of further entrenching the dominance of a few private actors over our economy and our social institutions,” warns Amba Kak, executive director of the AI Now Institute, one of the organizations that most closely monitors this field. It’s no coincidence that the great year of this technology has also been the year of the most secrecy: never before have the companies that develop AI shared less about their data, their work, or their funding. “As economic interests and security concerns increase, traditionally open companies have begun to be more secretive about their most innovative research,” laments the annual report on the sector, published by the venture capital firm Air Street Capital.

In their latest book, Power and Progress: Our Thousand-Year Struggle Over Technology and Prosperity, professors Daron Acemoglu and Simon Johnson (both of MIT) warn that history shows that new technologies don’t automatically benefit the entire population. Progress only occurs when the elites who try to monopolize it are challenged. “The technology sector and big companies have much more political influence today than at any other time in the last 100 years. Despite their scandals, technology magnates are respected and influential… only rarely does anyone question the type of progress they’re imposing on the rest of society,” the authors warn. “Most people around the globe today are better off than our ancestors, because citizens and workers in earlier industrial societies organized, challenged elite-dominated choices about technology and work conditions, and forced ways of sharing the gains from technical improvements more equitably. Today, we need to do the same again.”
