How deepfake technology has spread across the world: ‘We can all be victims. The internet is a jungle’

AI faking has leapt from images to voice, text, documents, and facial recognition to become one of the most worrying emerging vectors of fraud

Deepfakes — hyper-realistic reproductions that artificial intelligence has taken to unprecedented limits of accessibility and dissemination — are everywhere, as evidenced by the case of the AI-generated nude photos of teenagers circulating in the Badajoz province of Spain this week. “We can all be victims. The internet is a jungle. There is no control and it is not a game: it is a crime,” says Maribel Rengel, the president of the Extremadura federation of parents of students, Freapa. The most cited report on the phenomenon, published by Deeptrace in 2019, traced up to half a million fake files on the internet, with a growth rate in the number of deepfake videos online of almost 100% since the company previously released data. But this is only the work of one entity, and the real volume is unclear. What is known is that 96% of deepfake videos are related to pornography and that these creations have now leapt from image to voice, text, documents, and facial recognition, or a combination of all of these, to become one of the most worrying emerging vectors of fraud in an as-yet unregulated technology.

The European Telecommunications Standards Institute (ETSI) warns in one of the most comprehensive and up-to-date reports of the dangers of the “increasingly easy” use of artificial intelligence in the creation of fake files. “Due to significant progress in applying AI to the problem of generating or modifying data represented in different media formats (in particular, audio, video and text), new threats have emerged that can lead to substantial risks in various settings ranging from personal defamation and opening bank accounts using false identities (by attacks on biometric authentication procedures) to campaigns for influencing public opinion,” the report states. These are the main targets of deepfake technology:

Pornography

Danielle Citron, a law professor at Boston University and author of the book Hate Crimes in Cyberspace, says: “Deepfake technology is being used as a weapon against women by inserting their faces into pornography. It is frightening, shaming, degrading, and silencing. Deepfake sex videos tell people that their bodies are not their own and can make it difficult for them to have online relationships, get or keep a job, or feel safe.”

Rengel shares this view in the wake of the case in Badajoz, which affected several high schools in her community. “The feeling is one of vulnerability and it is very difficult to control. The damage is irreparable. They can ruin a girl’s life,” she says. Rengel has urged the Spanish authorities to move to regulate the use of this technology.

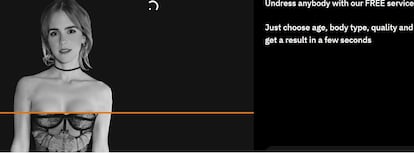

Deepfake attacks consist of the spreading of fake videos, images, audio, or text on social networks with the aim of ruining the reputation of the victim or humiliating them. The Deeptrace report estimated the number of video views of deepfake material across the top four dedicated websites at over 134 million. Celebrities are often the victims of these practices. Spanish singer Rosalía is a recent example in a lengthy international list headed by actors Emma Watson and Natalie Portman.

However, as Rengel warns, “no one is safe” from deepfake technology. At the click of a button, on open platforms or encrypted messaging systems, you can find applications and pages that offer to “undress anyone” in seconds. These sites carry age warnings for minors and state that users cannot use photos of a person without their consent, although there is no moderation and responsibility is placed on the user. These sites also claim that their objective is “entertainment.”

The U.S. Federal Bureau of Investigation (FBI) has warned of an increase in cybercriminals using images and videos from social networks to create deepfakes to harass and extort victims.

Hoaxes to influence public opinion

In a foreword to the Deeptrace report, the company’s founder Giorgio Patrini notes: “Deepfakes are already destabilizing political processes. Without defensive countermeasures, the integrity of democracies around the world are at risk.”

The ETSI report identifies these attacks as false publications that create the impression that people in influential positions have written, said, or done certain things. “In principle, this applies to all purposes where stakes are high and the benefit justifies the effort from an attacker’s perspective,” the entity warns. These can be used to discredit a person, manipulate prices, attack competitors, influence public opinion in the run-up to elections or referendums, strengthen the reach of a disinformation campaign, and serve as propaganda, particularly in times of war, ETSI notes.

In March 2022, a deepfake video of Ukrainian President Volodymyr Zelenskiy announcing his country’s surrender to Russia was released. U.S. Democratic politician Nancy Pelosi was the target of another, in which she appeared to be drunk, and public figures including Donald Trump, Barack Obama, Pope Francis and Elon Musk have all been the subjects of similar fake creations.

Attacks on authenticity

These attacks explicitly target remote biometric identification and authentication procedures. Such procedures are widely used in many countries to give users access to digital services, including bank accounts, because they reduce costs and make it easier to purchase products.

In 2022, Europe’s largest hacking association, Chaos Computer Club, carried out successful attacks against video identification procedures by applying deepfake methods.

Internet security

Many attacks rely on human error to gain access to corporate systems, but hyper-realistic fake files significantly increase the ability to do so by providing false data that is difficult to identify. This can involve writing in the style of the supposed sender, and voice and video files of people supposedly communicating with the victims of the attack. The goal is to lead victims to click on malicious links, which attackers can use to obtain login credentials or to install malware on devices.

In 2020, a bank manager in Hong Kong was duped by attackers who used AI voice cloning to imitate a senior executive to generate a transfer worth $35 million, Forbes reported. An energy company in the U.K. suffered a similar attack a year earlier that cost it $243,000.

Movies with fake actors and screenwriters

In the arts, this technology can be used to create cinematic content. The SAG-AFTRA union, which represents 160,000 workers in the entertainment industry, called a strike in Hollywood in July to demand, in addition to better pay, guarantees of protection against the use of artificial intelligence in productions.

Both actors and writers are demanding the regulation of technologies that can write a script or replace actors through the use of deepfakes, which make it possible to replicate a person’s physique, voice and movements. Widespread adoption of these tools would allow large production companies to largely dispense with human beings in the creation of content.

British actor Stephen Fry, who narrates the Harry Potter audiobooks in the UK, has denounced the use of his voice in a history documentary without his consent. “They used my reading of the seven volumes of the Harry Potter books, and from that dataset an AI of my voice was created and it made that new narration. It could have me read anything from a call to storm parliament to hard porn, all without my knowledge and without my permission,” he said.
