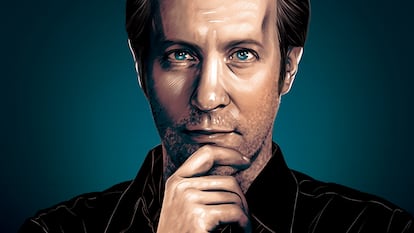

David Eagleman, investigator of the secrets of our minds

The U.S. neuroscientist, one of the most interesting scientific writers of our times, explains that each time we learn something, our neurons change. And that if someone loses their sight, part of the cells once in charge of seeing will begin to help them with another task, like listening.

Nothing of the mind is foreign to David Eagleman, neuroscientist, technologist, entrepreneur and one of the most interesting scientific writers of our time. Born in New Mexico 52 years ago, he now researches cerebral plasticity, synesthesia, perception of time and what he calls neurolaw, the intersection of knowledge about the brain and its legal implications. His 2011 book Incognito: The Secret Lives of the Brain has been translated into 28 languages, and he returned to publishing with Livewired: The Inside Story of the Ever-Changing Brain, which focuses on a fundamental idea for today’s neuroscience: that the brain is constantly changing in order to adapt to experience and learning. The science he brings to us isn’t merely top-notch, but firsthand, and his brilliant, crystal-clear writing, a perfect reflection of his mind, turns one of the most complex subjects of modern-day research into food for thought for the interested reader. We spoke with him in California by videoconference, the first interview that he’s given to a Spanish publication in a decade.

Could a newborn brain learn to live in a five-dimensional world? “We don’t actually know which things are pre-programmed and how much is experiential in our brains,” he replies. “If you could raise a baby in a five-dimensional world, which, of course, is unethical to do as an experiment, you might find that it’s perfectly able to function in that world. The general story of brain plasticity is that everything is more surprising than we thought, in terms of the brain’s ability to learn whatever world it drops into.”

Eagleman pulls out a sizable bowl of salad from somewhere, scoops a forkful into his mouth and continues his argument: “The five-dimensional world is hypothetical, but what we do see, of course, is that babies dropping into very different cultures around the planet, whether that’s a hyper-religious culture or an atheist culture, whether it’s a culture that lives on agriculture or a culture that is super technically advanced like here in Silicon Valley, the brain has no problem adjusting. My kids, when they were very young, could operate an iPad or cell phone just as easily as somebody growing up in a different place would operate farming equipment. So, we do know that brains are extremely flexible.”

The difference between genetics and experience, or between nature and nurture, isn’t so clear as it might seem. The usual thinking is that genes build the brain and then environment takes over by modifying the strength of connections between neurons (synapses) or by establishing new contacts. But forming new connections and modulating old ones requires reactivating the same genes that built the brain in the first place. I pose this issue to him.

“The way to think about the biology of the mind is that experience means there are changes at every level, so your intuition is exactly right: which genes are getting expressed and not expressed is part of the plasticity story. Often, the plasticity story just gets looked at from the point of view of the connections between neurons and the synapses that strengthen or weaken. The only reason we even think that is because it’s easy to measure. All of it is changing all the time; there’s no meaningful distinction between what’s happening at the level of the genome or what’s happening at the level of the synapse. Those are just arbitrary lines that we humans have drawn,” he responds.

A century of neuroscience has established that the cortex (or cerebral cortex), the outer layer that gives the brain its wrinkled appearance, is divided into hundreds of specialized areas: seeing, hearing, speaking, projecting, managing emotions, and all the rest. Yet anatomists have found no major differences between the circuit architecture of one area and another, nor are there any known genes specific to each area. What does this mean? One of the lessons from Eagleman’s new book is that the brain is the same all over. “The cortex uses one trick, the same circuitry everywhere. The only reason that we see distinctions, like, that is responding to visual stuff and that’s responding to auditory, is because different input cables are plugging in there,” says the neuroscientist.

For example, information from the eyes enters through the optic nerve to the back of the brain, and so, that area becomes what we call the visual cortex. But if you go blind, that same cortex becomes auditory, tactile or serves another purpose. Thus, there’s nothing fundamental about the compartmentalization of the brain, Eagleman explains: it’s just a matter of what input wires are plugged into one area or another, i.e., what kind of information it receives.

Mysterious evolution

The evolution of the brain is as mysterious as its functioning. The six million years that separate us from chimpanzees are just a blink of the eye on the scale of evolution, and some scientists believe that the key lies in the sheer increase in the size of our cortex, which has tripled in size compared to chimpanzees and australopithecines. Eagleman is one of them. “Our cortex is so much larger than any of our neighbors, and that is a big part of the magical change that happened. We have other things too, like a good larynx that allows us to communicate rapidly with spoken language, and of course, we have an opposable thumb and that really helps as well. But the main thing is the size of the cortex.”

The scientist continues: “Part of what that did, by the way, is it put more real estate in between input and output, so when you see some sensory information and you have to make a response, in most animals those two areas are very close together. With us, they’re more separated. As a result, when you see something, you can make other sorts of decisions. Like, you put some food in front of me, but I’m on a diet or I don’t want to eat this right now because I’m doing intermittent fasting or whatever.”

Eagleman teaches neuroscience at Stanford University in California, but his work as a researcher and teacher isn’t enough to keep his inquiring mind busy all the time. He is the chief executive of Neosensory, a company he helped to found that is dedicated to developing technology that helps the Deaf and Blind to regain some of their faculties by recruiting areas of the brain normally devoted to other functions, in order to replace the lost sense. He is also the chief scientist at BrainCheck, a digital platform that helps doctors to diagnose cognitive problems.

In addition to having written a number of highly informative books, he writes and hosts the television series The Brain with David Eagleman and the podcast Inner Cosmos with David Eagleman. If there is a connecting thread that runs through all this frenetic activity, it is harnessing knowledge about the brain to help medicine in innovative and creative ways.

“Consciousness is the great unsolved mystery of neuroscience. Some people think it’s just algorithms.”

My next question was inevitable. And Eagleman’s response was yes, he has indeed used ChatGPT. He says that the most fascinating part of large language models (LLMs, the type of system behind ChatGPT) is that, at the moment, we are in a time characterized more by discovery than by invention. When it comes to the majority of past inventions, such as washing machines and coffeemakers, we know exactly how they work, given that we thought them up, he says. But these LLMs are full of surprises and do things no one expected, not even their programmers. “It’s amazing. I think a few things. One is that they’re really, really good at connecting data that you didn’t think of, because they’ve read everything in the world and they have perfect memory. They can connect things, if you happen to ask the right question.”

Eagleman thinks that this ability of ChatGPT is extremely valuable to science. “There are something like 30,000 new papers that come out every month, and I can’t possibly read all that, but it can. I published a paper recently in which I suggest that what I call level one scientific discovery is where it puts things together that I simply didn’t know. But that’s different from level two scientific discovery, which requires imagining a model that doesn’t exist.”

“When Albert Einstein thought about light,” Eagleman continues, “he thought, what would it be like if I were riding on top of a photon? How would I see the world? By doing that, he ended up deriving the special theory of relativity. Now, what he was doing was not just putting together things that were already in the literature; he was thinking of a new model and running that simulation. That’s what I’m not sure, at the moment, that AI can do. That’s why I think we scientists still have a job at the moment.”

But Eagleman is also a writer. And he also believes that his other profession will endure in the era of AI, because, while ChatGPT can write “surprisingly cool answers” to a diverse array of questions, as a writer, it’s not particularly creative. “Everything that you or I might do — structure and write a paragraph and have a call back and do something really clever and interesting — at least at the moment, it doesn’t do anything nearly that good. So, at the moment, I’m not worried as a writer.”

But, I said, AI can copy him. “You are a master of metaphors and analogies. But if a ChatGPT kind of system could copy your way of doing that, do you think your work would be in danger, then?” I asked.

“You’re a writer too, you know writing is hard,” he responds, courteously. “And if in 10 years, ChatGPT could sort of write a good book, that would be amazing. But I’m not convinced that it can, because to write a good book requires putting together new ideas and new models, new ways of thinking, and then saying, ‘OK, what story would I tell to start this chapter to introduce this concept? And then, what concept do I introduce next and next?’ When I’m writing a book, I’m thinking of all these levels all at the same time, about what the experience is for the reader and how to do what is called a callback, where you go back to something that you had before. I’m thinking about all that the way you would compose a big symphony, for example. And I think at the moment, because ChatGPT or these LLMs are just thinking about which word comes next, they can’t do that. It can’t think on all these levels at the same time.”

Eagleman explains that what he finds difficult to digest when it comes to ChatGPT is that “it has read every single book that has ever existed, every blog, every web page, and it remembers all of that. What this illustrates is that fundamentally, we are sort of statistical machines also. And if you just copy the statistics of that and know what things went with what in every single thing that’s ever been written by humans, it turns out that does a lot better than we would have ever imagined.”

Mind/machine

One of the areas that Eagleman researches is mind/machine interfaces, small electrode panels that are implanted in the brain to help Blind or Deaf people. How do they make sure these electrodes connect to the correct neurons?

“You know the area that you’re putting it in. So, for example, if I want someone to control a robotic arm, I’m putting it in the area that controls the arm normally. It’s important to note that first of all, it’s a big collection of neurons. Let’s say you’re moving your arm, but if I tied a little weight to your wrist, it wouldn’t take you long to figure out how to move your wrist to grab the coffee appropriately. Your brain is used to always having your body change. Maybe I’m holding a bunch of stuff, and I have to open the door a different way, or whatever. The brain is super used to doing this. That’s why it doesn’t matter the exact 100 neurons that you’re measuring from. The brain will just figure it out. Like, OK, I want to control this robotic arm, and it just figures out how to move.”

Will machines achieve a form of consciousness? “Everyone has an opinion on this, but we don’t really know. The central unsolved mystery in neuroscience is consciousness. One idea is the computational hypothesis, which has to do with algorithms, and if you replicate those algorithms in silicon, then you get consciousness. But another school of thought says, look, maybe it’s something special that you’re getting with these biological systems, something that we haven’t even discovered yet.”

It’s amazing what a salad can do.

