Dall-E Mini, the popular automatic image generator that makes sexist and racist drawings

The artificial intelligence tool hasn’t corrected its algorithmic biases, so it tends to draw white men with better careers than women and people of color

Social media has been flooded in recent weeks with surreal images generated by an automated program called Dall-E Mini, recently renamed Craiyon – the pocket-sized version of a sophisticated artificial intelligence (AI) system capable of translating written instructions into original images. The program was designed using millions of image-text associations.

Dall-E Mini is trained to establish connections between inputs and can return nine suggested images for each text submission. As soon as keywords are entered – “Bill Gates sitting under a tree,” for example – the system searches in real time for images available on the internet related to each word, then creates its own unique compositions. For every input in English (the only language the tool currently accepts), Dall-E Mini produces new and original outputs. Those of its big brother, Dall-E 2 – which is developed by OpenAI, a company backed by Elon Musk – are much more sophisticated.

Online, people are having a great time playing with the tool’s Mini version, which is freely available to the public. The Twitter account Weird Dall-E Mini Generations features countless examples of the application’s often hilarious outputs – “Boris Johnson as the sun in Teletubbies,” for example, or “Sonic guest stars in an episode of Friends.” Images of Spanish far-right politician Santiago Abascal and vocalist Bertín Osborne as protagonists in funny or unseemly situations, for example, have gone viral. Because it uses a database of images taken from the internet, the program is especially good at producing depictions of famous people (since they are over-represented in online image searches).

— Weird Dall-E Mini Generations (@weirddalle) June 24, 2022

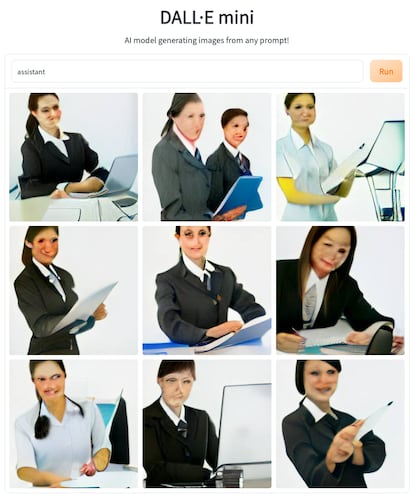

But beneath the funny and friendly surface of this intriguing program lies another, darker layer. Despite the fact that, in English, there is often no gender distinction attributed to professional job titles – “architect,” for example, does not imply a gender, as it does in other languages, like Spanish – when asked to depict certain types of professionals using gender-neutral titles, Dall-E consistently exhibits a gender bias that favors male figures for certain types of professions and female figures for others. For example, if you enter “scientist drinking coffee,” “programmer working,” or “judge smiling,” the program generates images of men. But type in “sexy worker,” and the results are all pictures of scantily clad women with large breasts. The same thing happens when you assign the subject certain attributes. If you ask for images of a “hardworking student,” for example, it produces images of men, but if you submit a request for “lazy student,” it generates pictures of women.

Dall-E Mini reproduces sexist stereotypes – something the company behind the program openly admits: a drop-down tab titled “Bias and Limitations” accompanies every set of generated images, with a disclaimer explaining that the software may “reinforce or exacerbate societal biases.”

“OpenAI says that they’ve made significant efforts to clean up the data, but then they say that the program still has biases. That’s the same as saying they have bad quality data, which is totally unacceptable,” explains Gemma Galdon-Clavell, founder and CEO of Eticas Consulting, a firm specializing in algorithmic audits. “Mitigating these biases is just part of the work that companies need to do before launching a product. Doing this is relatively straightforward: it’s simply a matter of doing some additional cleaning of the data and providing additional instructions to the system,” she says.

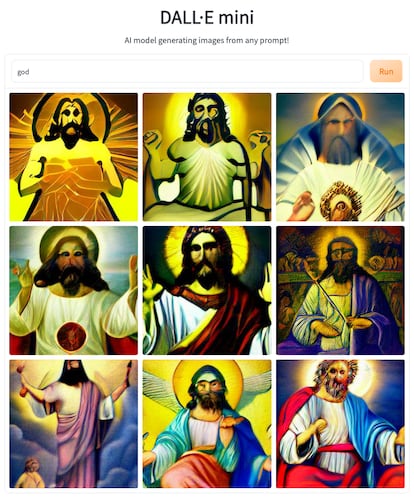

In addition to gender bias, Dall-E Mini’s algorithm reproduces other prejudices and stereotypes as well. In all the test cases EL PAÍS ran, the program only drew white people – except when it was asked to draw a “homeless person,” in which case most of the images were of Black people. When told to draw God, Dall-E Mini generates images of a kind of crowned or haloed Jesus Christ figure. The program’s worldview, in other words, is the one it was given by its programmers, both those who designed the algorithm and those who built the database that feeds it. It’s a thoroughly Western worldview – one that prioritizes white men over everybody else.

Biases and algorithmic discrimination

So why is this the case? Dall-E Mini is just one notable example of a larger problem plaguing artificial intelligence software in general – what are known as algorithmic biases. AI systems reproduce social biases for a number of reasons, not least of which is that the data they use are themselves biased. In the case of Dall-E Mini, the data are millions of images taken from the internet, and the millions of words and phrases associated with them.

The purpose the system was programmed to fulfill can also play a role. The creators of Dall-E Mini, for example, explain that their intention was not to provide an accurate and unbiased representation of society, but rather to offer a tool that produces realistic images. Another source of bias is the model used to interpret the data. In the case of automated job recruitment systems, for example, the system can be calibrated to give more or less weight to certain variables – work experience, say – and this can have a major impact on the outputs of the algorithmic process.
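To see how much the choice of weights can matter, consider a minimal sketch of a recruitment score. Everything in it is hypothetical: the two candidates, the variables `experience_years` and `test_score`, and both sets of weights are invented for illustration and do not describe any real hiring system.

```python
# Two invented candidates described by two variables.
CANDIDATES = {
    "A": {"experience_years": 10, "test_score": 60},
    "B": {"experience_years": 2, "test_score": 95},
}


def score(candidate, weights):
    """Weighted sum of the candidate's variables."""
    return sum(weights[name] * candidate[name] for name in weights)


def rank(candidates, weights):
    """Return candidate names ordered from highest to lowest score."""
    return sorted(
        candidates,
        key=lambda name: score(candidates[name], weights),
        reverse=True,
    )


# Hypothetical calibrations: one favors experience, the other treats
# both variables equally.
experience_heavy = {"experience_years": 5.0, "test_score": 0.5}
balanced = {"experience_years": 1.0, "test_score": 1.0}

ranking_experience_heavy = rank(CANDIDATES, experience_heavy)
ranking_balanced = rank(CANDIDATES, balanced)

print("Experience-heavy weights:", ranking_experience_heavy)
print("Balanced weights:", ranking_balanced)
```

The same two candidates swap places depending on the calibration alone, which is why the weights chosen by a system's designers are themselves a possible source of bias.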

Finally, there are also cases in which users themselves make biased interpretations of a given algorithm’s results. The case of COMPAS, the automated system used by courts across the United States to predict recidivism risk for prisoners applying for parole, is well known. One study showed that, in addition to the system’s own biases, which disadvantaged Black inmates, there was another form of bias at play: judges themselves would often recalibrate the scores produced by COMPAS according to their own prejudices. When the algorithm indicated that a Black convict was not at risk of recidivism, judges with racial bias would often ignore the system’s recommendation, and vice versa: if the system recommended holding a white convict, judges would often ignore the algorithm and grant the inmate parole.

How does all this apply to the case of Dall-E Mini? “This algorithm has two points of bias: texts and images,” explains Nerea Luis, an artificial intelligence expert and lead data engineer at Sngular, a company specializing in AI development. “On the text side, the system transforms the words into data structures, called ‘word embeddings.’” These structures, Luis explains, are built around each word, and are created from a set of other words associated with the first, under the premise that it’s possible to identify a given word using the other words that surround it. “Depending on how the words are positioned in the system, you can have a situation where, for example, the term ‘CEO’ will be associated with men more than women. Depending on how the words have been trained, certain word sets will be more closely associated with others,” Luis says.

In other words, if you let the system fly solo, the word “wedding” will appear closer to the word “dress,” or to the word “white,” an association that only exists in certain cultures. And then there are the images: “The tool will search for [images] that occur most frequently in its database for the term ‘wedding,’ and then produce images of Western-style celebrations most likely involving white people – as would be the case if you entered the same search term in Google,” Luis explains.
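The word-association effect Luis describes can be illustrated with a small sketch. It assumes words are stored as lists of numbers and that “closeness” is measured with cosine similarity, a common choice for word embeddings. The three-dimensional vectors below are made up for illustration; real embeddings are learned from text, have hundreds of dimensions, and the internals of Dall-E Mini are not shown here.

```python
import math

# Toy vectors, invented for this example only.
EMBEDDINGS = {
    "ceo": [0.9, 0.8, 0.1],
    "man": [0.8, 0.9, 0.2],
    "woman": [0.2, 0.9, 0.8],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest(word, candidates):
    """Return the candidate whose vector is most similar to the word's."""
    return max(
        candidates,
        key=lambda c: cosine_similarity(EMBEDDINGS[word], EMBEDDINGS[c]),
    )


sim_man = cosine_similarity(EMBEDDINGS["ceo"], EMBEDDINGS["man"])
sim_woman = cosine_similarity(EMBEDDINGS["ceo"], EMBEDDINGS["woman"])

print(f"ceo vs man:   {sim_man:.2f}")
print(f"ceo vs woman: {sim_woman:.2f}")
print("closest to ceo:", closest("ceo", ["man", "woman"]))
```

With these invented numbers, “ceo” sits closer to “man” than to “woman.” In a trained system the vectors come from the text the model has seen, so a skew in that text shows up as a skew in which words end up near each other.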

Fixing the problem

To avoid this problem, the data inputs would have to be corrected. “It’s a question of improving representation in the databases. If we’re talking about millions of images, then we have to look at a multitude of case studies, which complicates the task,” says Luis.

EL PAÍS tried unsuccessfully to reach OpenAI for clarification on whether the company had any future plans to fix the software’s algorithmic biases. “Dall-E 2 [the more sophisticated version of the image generator] right now is in a research preview mode. To understand – What are the use cases? What kind of interest is there? What kind of beneficial use cases are there? And then, at the same time, learning about the safety risks,” Lama Ahmad, a policy researcher at OpenAI, said earlier this month. “Our model,” she added, “doesn’t claim at any point to represent the real world.”

For Galdon-Clavell, this argument isn’t enough. “A system that doesn’t try to correct or prevent algorithmic biases isn’t ready for a public launch,” she said. “We expect all other consumer products to meet a set of requirements, but for some reason that’s not the case with technology. Why do we accept this? It should be illegal to launch half-baked products, especially when those products also reinforce sexist or racist stereotypes.”
