Brains connected to the computer: will it be the end of humans’ ability to decide?

The possibility of connecting the mind to a computer is getting closer. The scenarios that open up range from hope to hypervigilance

Two future scenarios await mankind. One is pleasant and the other is disturbing. First the pleasant one: you can turn on the television using just your mind, you are able to communicate your thoughts to another person without the need to speak or write, learning new skills takes a fraction of a second, and you can remember everything with crystal clarity. Now the disturbing part: a machine can predict your decisions, third parties can access your most intimate thoughts, you live in a state of perpetual surveillance, and your perceptions and memories can be manipulated.

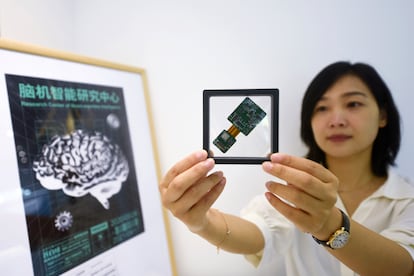

These are some of the situations that could become a reality with the advancement of neurotechnological devices such as brain-computer interfaces (BCI), technology that connects the human mind with a computer. Although it may seem like the plot of a science fiction movie, version 1.0 of these interfaces could be on the market in less than five years. In the clinical setting, researchers have already decoded the thoughts of a paralyzed woman who is unable to speak, translating her brain activity into words. In another experiment, a person’s memory of a video they had just watched was displayed on a monitor with impressive fidelity.

In Hegel in a Wired Brain, philosopher Slavoj Žižek speculates about how connecting the brain to computers would change our understanding of thought, freedom, and individuality. For example, BCIs could revolutionize communication by eliminating the language barrier and allowing the instantaneous and accurate transmission of thoughts between people. We would go from speaking to someone to thinking with someone. Such a level of transparency would erode the distinction between the “self” and the “other.” By fully sharing our subjective experiences, we would face a paradox: on the one hand, an increase in empathy and mutual understanding, and on the other, a possible loss of the personal uniqueness that defines us as individuals.

Most disturbing, according to Žižek, is the possibility of a state of hypervigilance in which brain activity is constantly monitored and recorded. He argues that this could lead to a pre-crime state, where authorities act before crimes are committed, much as in the film Minority Report. Žižek suggests that “the ultimate goal of digitally recording our actions is to predict and prevent transgressions.” This would have a dramatic impact on individual freedom, compromising our ability to make decisions for ourselves. “It is not that the computer that records our activity is omnipotent and infallible,” he writes, “but that its decisions are usually, on average, better than ours.”

Right now, Chile and Brazil are the only two countries in the world that have implemented legislative measures to protect brain information. Neuroscientist Rafael Yuste, a professor at Columbia University, stresses in a video call the critical importance of establishing “neurorights” to preserve privacy and mental identity as neurotechnology advances. “We want to prevent what has happened with other disruptive technologies such as the internet, the metaverse, or artificial intelligence. These had no regulation until society realized the negative consequences, and it is already too late.” He adds: “It is often said that when a new technology emerges, you don’t really know what it is for, but it is very easy to regulate it. And then, once it is implanted, you know perfectly well what it is for, but it is impossible to put it back in the drawer.”

There are two types of BCI: invasive and non-invasive. Both can measure brain activity and modify it. Invasive devices must be implanted inside the skull, although not necessarily in the brain tissue. They are commonly known as brain chips and are being developed by companies such as Elon Musk’s Neuralink. According to Yuste, this type raises no particular concern, since the devices are rigorously regulated and any information they generate is protected by medical privacy legislation.

The problem comes with the second, non-invasive type (such as headbands or helmets), which is sold as an ordinary consumer electronics product without a specific regulatory framework. “Users sign contracts with companies, and clauses in the small print that no one reads give them full control over brain data, allowing them to sell this information without additional consent,” explains Yuste. “And if you want to access or recover your own brain data, some companies even charge you money for it.” Neurodata, that is, the information that comes from our brain activity, is highly sensitive and even more private than any other type of data. “It is much more serious than losing privacy on your mobile because the brain controls everything we are. Neurotechnology reaches the essence of the human being. We could lose everything with this.”

The inequality gap

Another concern raised by experts is the inequality gap that could open up between those who have access to this technology and those who do not. Right now, anyone can buy a brain stimulation device for less than $1,000. These devices can allegedly lead to cognitive improvements in, say, memory. “On Amazon they sell like hotcakes,” says neuroscientist Álvaro Pascual-Leone, a specialist in this type of technology. “In the United States, there are communities related to the DIY culture that publish detailed instructions on forums such as Reddit on how to build these brain self-stimulation devices at home.” Pascual-Leone warns of the dilemmas that arise when extending these therapies beyond the clinical context. “It is necessary to define who will determine which capabilities should be enhanced and which should not, as well as who will have access to these improvements. The logical thing would be for these technologies to be applied first in the context in which there is a medical need.”

The philosopher Carlos Blanco, author of the book The Frontiers of Thought, admits that nothing would fascinate him more than seeing an intelligence superior to that of humans, but he sees it as still a very remote possibility. “We already have the ability to accumulate and process more and more information using a computer. The leap should also be qualitative: it understands things that we cannot understand, it thinks beyond the paradigms that we have developed. With a greater capacity for abstraction and understanding,” Blanco explains in a phone call. For him, moving forward means “expanding the limits of what is thinkable” and “thinking what has not yet been thought.” He argues that any development of artificial or posthuman intelligence must include strong control mechanisms as an imperative. Despite the concerns, he remains optimistic about the emergence of a posthuman intelligence: “If you truly understand reality more deeply, you will probably not seek to use your power to oppress but to create and to build.”
