Robots that feel what they touch

Touch is central to the relationship between humans and their surroundings, and the same applies to robots and their creators. A European project spearheaded by the University of Bologna is teaching machines to distinguish between the things they touch so that they can apply the appropriate degree of force.

Many years ago, the famous anthropologist Ashley Montagu wrote that “the communication we transmit through touch is the most powerful means of establishing human relationships.” Now, in early May 2023, a group of researchers at the University of Bologna is striving to give a machine the sense of touch. To do so, they are using two technologies to endow a robotic hand with two different types of sensitivity. The first, which is somewhat coarser, will cover most of the surface of the palm. The second will provide much richer and more complete information about the firmness, roughness or smoothness of objects. Because this kind of information is conveyed through the fingertips, that is precisely where the second apparatus will be located.

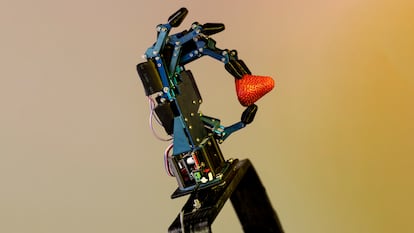

Today, in the Robotics Laboratory at the Faculty of Engineering, predoctoral researcher Alessandra Bernardini explains to visitors, with immense patience, how it all works. Small hemispherical sensors made of a malleable, rubber-like material are attached to the hand and feed the computer information about the properties of the object it is holding. She is even willing to give up two of the strawberries she brought for lunch to help with the demonstration. As the robotic hand squeezes the red fruit, phototransmitters in the sensors convert the deformations of the rubber into data, which appear on the screen as line graphs that sometimes move in unison and sometimes independently.

Beside her, Roberto Meattini, another member of the team, continues the demonstration. A rectangular structure is securely fastened to his right wrist. It has an opening at one end for his hand, and the robotic prototype is connected at the other end. He then begins to slowly flex and extend his fingers. With Bernardini at the computer controls, he is teaching the prosthesis to read the movements of the forearm muscles that direct his hand so that it can then repeat them. A wristband positioned a little below the elbow collects this information and transmits it to the robot via wireless sensors.

The two engineers, along with half a dozen other colleagues and under the leadership of Professor Gianluca Palli, are only taking the first steps in the IntelliMan project. The project is run by a consortium of 13 universities, companies and research centers from six European countries. Its objective is to develop a new artificial intelligence-driven manipulation system that enables robots (whether prostheses like the hand shown above or independent machines) to learn both from their surroundings and from their interaction with people. The European Commission has selected it as one of the 42 leading artificial intelligence and robotics initiatives launched under Horizon Europe, the continent’s flagship research and innovation program. According to the document that compiles them, these initiatives were chosen for their potential to “improve the society we live in by addressing relevant technological challenges.” The selected proposals range from a large center that will work to ensure safety in artificial intelligence research to projects on water reclamation, recycling and drones that support field workers and infrastructure maintenance staff in dangerous locations.

In his office in the historic Italian city, Professor Palli explains what IntelliMan hopes to achieve with the €4.5 million ($4.8 million) it will receive from the Commission between September 2022 and February 2026. The first goal is to create prosthetic limbs that let their users easily perform everyday activities, such as holding a glass or opening a door or a drawer. The next step will be to transfer these capabilities to an autonomous machine that could soon become a robotic home assistant able to start the dishwasher by itself or clear the table after a meal. The idea is for these machines to interact with people, but nobody should expect to chat with them yet. The aim is to teach them new tasks by showing them how to do something once so that they can repeat it afterwards. The machines should also be able to adapt what they have learned to a changing context, such as an obstacle in the path that wasn’t there before or an object that is more slippery than the previous one.

For this to happen, robots need the sense of sight, which can be addressed with cameras and sensors, and the more challenging sense of touch, which is what the team is working on. The goal is not only to give the machine this sense but also, in the case of prostheses, to let the person using it feel it in some way. “For instance, we are working on the transmission of grip force by means of vibrotactile motors, which are small motors that vibrate at different frequencies and amplitudes [depending on whether that force is higher or lower],” explains Meattini, back in the lab. “It’s something that even the most advanced prostheses on the market can’t yet do,” he adds.

The researchers work in a space filled with computers and modern prototypes of state-of-the-art machinery. It also holds numerous cables, cardboard, a few rolls of insulating tape and a large metal cabinet from which the engineers pull out all sorts of large, medium and small tools. Our collective imagination is dominated by ultra-modern, white, aseptic technology with smooth, rounded shapes. In contrast, this laboratory is a reminder that progress, at least some of it, still happens in places cluttered with plugs and sharp corners. Here, objects have to be assembled and tested over and over again to figure out how to make them work. These are places where students, doctoral candidates, budding researchers and seasoned scientists mingle, working on simultaneous projects that converge and sometimes overlap. Perhaps precisely because of all this, it is essential to break each objective down into smaller, manageable tasks and to advance step by step, but steadily, toward the final result.

Right now, for IntelliMan, the engineers who make up the Bologna group are focused on understanding how a robotic grip works: “First you have to reach the object, then establish contact and understand the context surrounding it in order to know whether or not you can increase the grip force,” Meattini explains. This can be done in several ways. The most common is to build mathematical models that describe how the process can be carried out and repeated without failure. “The problem with this is that, in theory, it works flawlessly, but in real life you don’t know exactly where it’s going to touch, or how. That’s why we’re working on a different approach. It is artificial intelligence based on probabilities, so that we can take the sensor measurements, map them into our probabilistic model and let it tell us what the grip should be like. The real world is probabilistic; it’s not like a formula,” the researcher adds.

This real world, with its failures, its unforeseen pitfalls and its unusual solutions, plays an important role in this research. It takes the form of factory workers sharing the hidden secrets of their everyday tasks. It also takes the form of amputees telling the scientists exactly what they need from a prosthesis for it to be truly useful rather than a hindrance (the specialized rehabilitation center in Budrio, near Bologna, run by the Italian government’s National Institute for Accident Prevention, belongs to the consortium). The idea of seeking new approaches to old problems is also very much present; indeed, it underpins this entire collective effort. For instance, the machine must not only sense what is going on around it but, above all, know what to do next with that information. The team is trying to solve this “by combining two approaches that are now fully established, but whose connection we are still investigating,” Palli points out. He lingers on the explanation, because it is one of the most distinctive features of his project.

On the one hand, there are the more classical approaches, which map and preconfigure in time and space everything the robot will do, for example that it will pick up a specific object at one point and put it down at another. “It is difficult to put these solutions into practice if you do not have full information about the context in which you are operating,” he points out. Having that full information is simply impossible given the unpredictability of everyday life and of interaction with people. This leads to the second approach, the crucial one of machine learning and artificial intelligence. Here, a vast amount of contextual information is fed to the machine through millions of examples so that it learns how something is done. According to Palli, this approach also has a major limitation: it requires an enormous wealth of data. Such a dataset might be possible to compile in a field such as economics, where countless records are available. For a robotic application like the one they envisage, however, it does not exist and is out of reach, because collecting it is too expensive in terms of time, equipment and so on.

“In a way, we are attempting to define the robot’s blueprint and, on the basis of very few experiments, to collect enough data to adapt the blueprint to the true conditions of the environment. But we also want the human being, the person, to be actively present in this environment, to interact with it, and to educate it as well,” summarizes the project coordinator.

The 46-year-old Palli speaks with the assurance of someone who has spent several decades pursuing ways to create more complete and independent robots. He works at an institution that was a pioneer in this field, specifically in the development of anthropomorphic robotic hands that became increasingly reliable, simple and inexpensive. That history can be traced through the prototypes on show in several display cases in the university’s robotics laboratory. The first was funded in 1988 by IBM Italy. It was a rudimentary device with two parallel fingers and an opposing thumb, controlled by artificial muscles, tendons driven by several motors, and a system of computers and electronic equipment. One of the most recent is a robotic hand moved by a sophisticated arm, with a braided-rope tendon transmission, which researchers have used to take their first steps toward introducing tactile sensors. The lab is now heading into a new generation. It builds on this body of knowledge, which includes everything that was achieved and everything that was ruled out along the way.

However, the project headed by Palli goes far beyond robotic hands and prostheses. The team is working not only on domestic robots but also on applications for various industries. On the one hand, they are collaborating with the U.K. online supermarket chain Ocado on a robot capable of taking fruit out of a large box and placing it in smaller ones. This requires adjusting the gripping force depending on whether the robot is handling apples, oranges or strawberries. On the other hand, they are tackling something even more difficult for the automotive industry (the Slovenian components company Elvez is another partner in the consortium): assembling cables and connectors, which are deformable materials whose handling calls for considerable dexterity.

They are moving toward these goals, but the next breakthrough will hinge on breaking down barriers in many fields of science. These range from mathematics, which is needed to best represent the robot’s preassigned plan and how it perceives its surroundings, to parallel technological developments, since touch sensors are still being developed and improved. Yet Palli is particularly concerned about how the interaction between machine and human might play out, and for this reason the project is supported by a team of psychologists.

The role that touch will play along this path will depend largely on how the technology develops, and for now it remains very rudimentary. Nevertheless, we should not forget the words of the British-American anthropologist Ashley Montagu on the power of this sense, perhaps the most complex of all, in human communication. In his classic book Touching: The Human Significance of the Skin, the Princeton University professor, who died in 1999, also wrote: “In the evolution of the senses, touch was undoubtedly the first to exist. Touch is the parent of our eyes, ears, nose and mouth. [...] as Bertrand Russell pointed out some time ago, it is the sense that gives us a sense of reality. Not only our geometry and our physics, but all conception of what exists beyond us is based on the sense of touch.”

“I agree that touch will become increasingly important, because it’s what totally changes the game when you interact with objects and the surroundings. I think it will really unlock the ability of robots to interact with the environment in an effective way,” says Palli. For the time being, however, he and his team are proposing a kind of shared autonomy to ensure that the relationship between humans and machines works well. The person who begins interacting with the machine treats it like a child who has to be taught and gradually given more leeway to make its own decisions. “That means that the robot starts from a point where it is fully dependent on the human, because it doesn’t know how to do things. The human will teach it what to do, which gives the robot more autonomy, because it will be able to do this independently from now on,” explains the researcher. This process will also build the person’s trust in the robot.

When Roberto Meattini is asked at a social gathering what he does for a living, he usually opts for the short version: “I work on robot-human interaction; I study how to connect humans with robots and vice versa.” He insists that his work is not about creating robots but about this relationship, which underpins the theoretical foundations of the main automation and systems engineering disciplines the team works with. In his doctoral thesis, he mentioned the toy robot his parents bought him for his fifth birthday. He is also convinced of the importance of touch on the road to improvement: “The ability to sense and respond to touch will empower robots to not only accomplish manipulation tasks and autonomous operations better, but also to understand human intentions and serve human needs, thereby leading to more intuitive and effective interactions.”

This certainly sounds good. At first glance these robots have only basic abilities, but it is still impossible to avoid the growing fears about the potential unintended consequences of artificial intelligence development. After all, these are robots capable of learning new things. “We apply ethical principles to all of our research,” Palli says when asked about this. But do they envision instilling some sort of ethics in the robots themselves? “No, not in principle; we are not working on that. We are working on safety, so that all the tasks they perform are done safely,” he responds, before adding: “We could say that we are working on a core ethical requirement.”

Sign up for our weekly newsletter to get more English-language news coverage from EL PAÍS USA Edition