Will Community Notes, Elon Musk’s tool to combat misinformation, turn into a polarized battleground?

The open-source algorithm enables users to collaboratively add context to potentially misleading tweets

“Community Notes are the best thing that’s happened to Twitter,” wrote one user. Another posted, “I’ve been feeling pretty disgusted with Twitter lately, but Community Notes has given me a reason to get involved again!” One user said, “Community Notes are the best thing Twitter has come up with in years. Who doesn’t love seeing a post that was immediately spotted as an outright lie?”

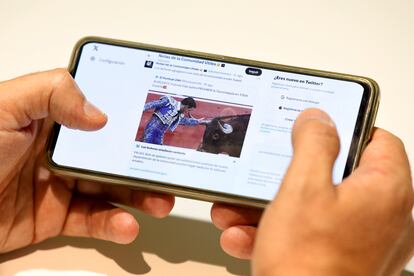

It’s easy to find opposing views about anything posted on X (formerly Twitter). But people have been mostly praising the new Community Notes, a moderation system where users can rate posts and offer context for them. The idea originated in 2021 with Twitter co-founder Jack Dorsey and was initially known as Birdwatch. When Musk bought the company, he became a big supporter and developed the recently released tool. Musk’s own account has been moderated through Community Notes, which the company says apply equally to all accounts on the platform without exception, including world leaders and large advertisers. Like Wikipedia, it entrusts a group of registered users with the task of minimizing misinformation on the internet.

In Europe, Community Notes was first tested and released in Spain, followed by France, Germany, Italy, the Netherlands and Belgium. As of early September, all 23 European countries have access to the tool. Registration is open in several Latin American countries, but the system is not yet fully functional there.

The volume of Community Notes is still low and mainly focuses on viral content or popular accounts. X has a Helpful Community Notes page for every major language. While the English-language page has 4,000 posts, the Spanish one only has 251. X users have posted notes about Spanish politician Alberto Núñez Feijóo’s sexist remarks about an opponent, a Mexican journalist who exaggerated the danger of water discharged by the Fukushima nuclear power plant in Japan, and the controversy surrounding Spanish soccer federation leader Luis Rubiales’ inappropriate behavior following the nation’s recent World Cup win. Traditional media haven’t been immune, and X users have posted notes about widely shared fake news, like how an animal’s flatulence helped it escape the jaws of a fleet-footed cheetah.

Community Notes is certain to turn into a new political and cultural battleground. There are plenty of platform users begging for notes about posts they don’t like because of the undeniable pleasure in watching a rival get battered on X by hundreds or thousands of notes. But the Community Notes algorithm is more complex than it seems. Registered users have to evaluate suggested notes written by others and give the reasons for their assessment.

“The process involves rating whether a note is helpful by applying a set of criteria,” said user Daurmith (who prefers not to give her real name). “A note is considered helpful if it uses high-quality sources, provides important context and uses neutral language. A note can be rated unhelpful based on criteria like using biased language, failure to cite sources and other reliability factors.”

The rating system also prevents editors from getting into arguments. “You can’t turn the system into a war zone because when I evaluate someone’s note, they can’t see my rating,” said Spanish streamer and registered X user Andrea Sanchis. Editors are anonymous — a username on X is different from the name on the Community Notes editing platform. To become a member of this court of opinion, simply provide a phone number and complete a short form. Plus, there’s no need to pay the monthly X subscription.

Sometimes things can get complicated. EL PAÍS came across comments about a posted video related to the Luis Rubiales controversy. The contentious post produced seven suggested notes (none have been approved yet), followed by another six explanations for why a note was unnecessary. One person argued against adding context and said, “The video is clear, and provides the necessary context. No note required. Viewers can form their own opinions, but the information and video has not been manipulated.” The NNN (No Note Needed) acronym is sure to appear more and more frequently in the Community Notes system. Ultimately, X’s algorithm weighs votes from other users to decide whether to post a note for all users to see.

X publishes only notes that have received enough “helpful” ratings from users who have typically disagreed with one another in past ratings. This practice aims to promote ideological diversity and protect against partisan or trolling efforts. Even after publication, anyone, including unregistered users, can continue to rate the note. It is not uncommon for a previously published note to be eventually removed.
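The publication rule described above, in which a note needs support from raters who usually disagree, can be illustrated with a deliberately simplified sketch. This is not X’s actual code: the open-source algorithm is more involved (it is based on matrix factorization), and the two viewpoint groups, the thresholds and the function name `should_publish` are all assumptions made up for this example.

```python
from collections import defaultdict

# Hypothetical thresholds, chosen only for illustration.
MIN_RATINGS_PER_GROUP = 2
MIN_HELPFUL_SHARE = 0.6


def should_publish(ratings, rater_group):
    """Return True only if every viewpoint group finds the note helpful.

    ratings: list of (rater_id, is_helpful) pairs for a single note.
    rater_group: dict mapping rater_id to a viewpoint label. The labels are
        assumed to be inferred elsewhere from how raters disagreed in the past.
    """
    groups = set(rater_group.values())
    if len(groups) < 2:
        # Without at least two viewpoints there is nothing to bridge.
        return False

    helpful = defaultdict(int)
    total = defaultdict(int)
    for rater_id, is_helpful in ratings:
        group = rater_group[rater_id]
        total[group] += 1
        if is_helpful:
            helpful[group] += 1

    for group in groups:
        # Each group needs enough ratings, and enough of them must be positive.
        if total[group] < MIN_RATINGS_PER_GROUP:
            return False
        if helpful[group] / total[group] < MIN_HELPFUL_SHARE:
            return False
    return True


# Toy data: two raters on each side of a hypothetical divide.
rater_group = {"a1": "A", "a2": "A", "b1": "B", "b2": "B"}
one_sided = [("a1", True), ("a2", True), ("b1", False), ("b2", False)]
bridging = [("a1", True), ("a2", True), ("b1", True), ("b2", True)]
only_group_a = [("a1", True), ("a2", True)]

print(should_publish(one_sided, rater_group))
print(should_publish(bridging, rater_group))
print(should_publish(only_group_a, rater_group))
```

In this toy version, a note cheered on by one side alone stays hidden no matter how many ratings it collects, which is the property that is meant to blunt partisan pile-ons.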

Since registered users are not obligated to vote on everything, there are significantly more unpublished notes than the select few in circulation. “Just looking at it, I’d say only about 5% to 10% of the notes actually get shared with the public,” said Manuel Herrador, a professor at the University of A Coruña (Spain) and registered X user.

Can it help control disinformation?

How beneficial is a system like this for controlling disinformation? One positive aspect is that it contributes to collective knowledge. “Collective moderation and contextual information are valuable tools for capturing quality information and effectively identifying disinformation,” said Sílvia Majó, a professor at Vrije Universiteit Amsterdam and a researcher with Oxford University’s Reuters Institute for the Study of Journalism. “Collaboration platforms for programmers like Stack Overflow work like this. The approach of contributing collective knowledge and organizing it contextually is a valuable tool for producing high-quality content.”

Wikipedia exemplifies the remarkable success of collective knowledge facilitated by the internet. However, can real-time information platforms effectively adopt this format? It’s not an easy task. Typically, notes appear hours or days after the original posts. “I think it is a good solution for a platform like X,” says Àlex Hinojo, a Wikipedia editor. “But you need a solid group of volunteers. It’s all about how they divide up volunteer participation based on languages and topics, and whether they make it easy for newcomers to join in.” But can it turn into a useless, polarized battleground? “Yes, of course. It’s tough to find the right balance,” said Hinojo.

♥️♥️ @CommunityNotes ♥️♥️

— Elon Musk (@elonmusk) April 2, 2023

“The whole process can be pretty discouraging,” said Herrador. “You often end up grading a bunch of notes that never see the light of day, or some that have no tangible outcomes. Given how demotivating this selfless task is, I don’t really see a future for it. So, it’s quite likely that special interest groups will eventually end up taking control.” Computer scientist Míchel González, another Community Notes editor we consulted for this article, says the altruism and effort involved are positive things. “Well, that’s what they said about Wikipedia at the beginning. It’s a prime example of how democratization prevents pressure groups from controlling information.”

Editing conflicts on Wikipedia happen behind the scenes, while on X, votes and notes are constantly appearing and disappearing. The official Community Notes account recently addressed the issue directly. “We updated the scoring algorithm to reduce note ‘flipping’: notes appearing and then later disappearing as they receive a larger and potentially more representative set of ratings.”

Fired moderators

Twitter used to have a large moderation team that would label, sanction and delete tweets. But much of the team was fired or left when Musk bought the company. Musk wants to transfer moderation responsibilities to users, but Sílvia Majó thinks this won’t be easy. “Professional moderation by humans is crucial. While automated moderation is getting better, so are disinformation strategies, making it challenging to foresee the future.”

Majó pointed to Meta’s Oversight Board, which deals with highly sensitive matters, and cited Musk’s well-known reluctance to accept external advice. The board’s response times are also frustratingly slow. The EU has already issued warnings emphasizing the need to take seriously the new EU law regulating digital services. “Twitter leaves EU voluntary Code of Practice against disinformation. But obligations remain. You can run but you can’t hide,” tweeted EU Commissioner Thierry Breton.

Musk’s enthusiasm for Community Notes aligns with the increasing tendency of others to neglect moderating their platforms. “Platforms are increasingly removing political content altogether,” said Majó. A scientific article by Purdue University (Indiana) researchers analyzed the system when it was still called Birdwatch. The study found fewer instances of misinformation, but also observed reduced activity. This decrease in activity might be attributed to users’ hesitation in retweeting or amplifying messages that could potentially be “punished” by corrections from others. These findings “suggest a higher level of performance in misinformation monitoring and, hence, improved average content quality... The launch of the program decreases the number of tweets created, shortens the average length of tweets, and discourages users from resharing other users’ content, which points to a cost of a lower volume of users’ content generation,” the authors of the study wrote.
